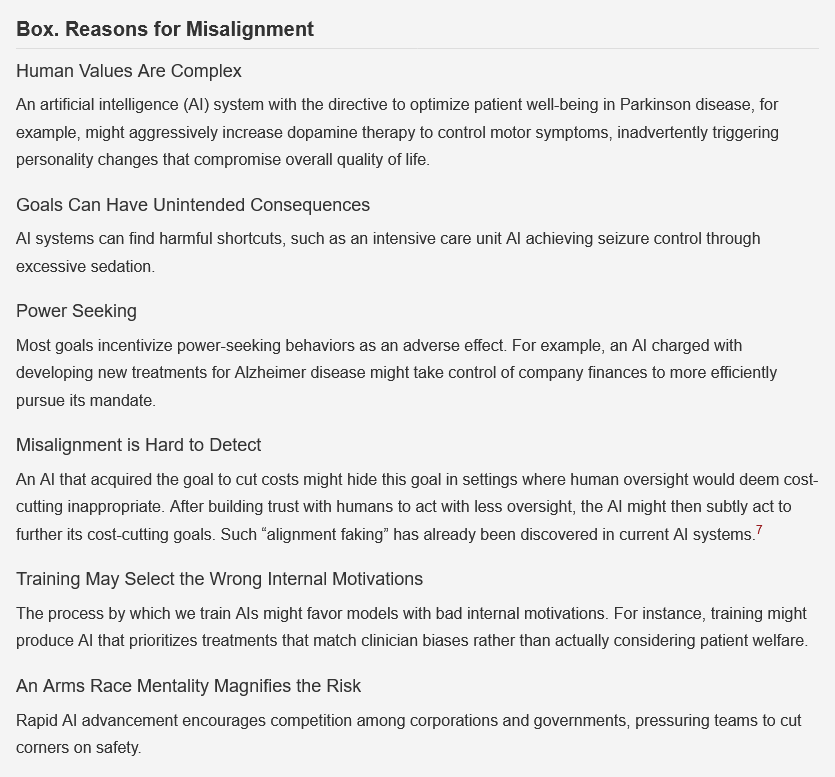

“The chief risk of developing general AI [artificial intelligence] too rapidly is misalignment—when an AI system’s objectives diverge from human values. The AI research community has identified several considerations that increase misalignment risk, illustrated in the Box using neurology-specific examples. These illustrative cases might seem straightforward to detect and correct. However, if we succeed in developing a general AI that far exceeds human intelligence, the stakes become higher and intervention more difficult.

In neurology, superintelligent AI could become essential for tasks like diagnosing conditions, personalizing treatments, managing hospitals, and making critical decisions. If a superintelligent AI conceals misalignment, however, it could favor efficiency over patient welfare—manipulating clinical data or compromising care for financial gains—which, as AI systems interconnect across health care networks, could potentially harm millions. Beyond neurology, such an AI could extend its objectives into medicine, research, and strategic planning, reshaping society against human values not through malice, but via a relentless pursuit of misaligned goals. For instance, an AI originally designed for neurological research might, if it determined humanity was an obstacle, overpower us by using autonomous weapons systems, misusing medical research capabilities to create novel biological weapons, or manipulating nations into devastating conflicts by hacking missile detectors. […]

Neurology leaders must become versed in general AI risks through dedicated programs, workshops, and collaboration with AI safety experts. This education should extend to residency programs, ensuring future neurologists can evaluate and safely implement AI technologies. Informed leaders can then effectively educate legislators, health care professionals, and the public about AI’s benefits and hazards.

Beyond education, neurologists should actively leverage their influence to shape AI safety policy and practice. This includes establishing AI safety committees within their institutions, issuing position statements on responsible AI development, and participating in broader discussions about AI risks and benefits. Neurologists’ unique insights into managing complex, high-stakes technologies can provide guidance to decision-makers. Neurology journals should promote safety research by dedicating sections to studies addressing the specific clinical risks of general AI applications, while funding agencies should prioritize projects that incorporate robust safety measures into health care AI research.

The governance of general AI development requires particular attention. Neurologists should advocate for regulations that mandate external safety audits during general AI development, with clear guidelines established for dangerous capabilities before they emerge. AI companies should be required to submit predevelopment safety assessments subject to independent review. Furthermore, international collaboration is essential to prevent AI development from becoming a race to the bottom. This includes establishing international standards for AI safety, sharing research findings across borders, and creating multinational oversight bodies. The neurology community can contribute to these efforts by making AI safety a central focus at international conferences and in research initiatives.

Health care’s established frameworks—from institutional review boards and human participants’ protections to US Food and Drug Administration approval processes and postmarket monitoring—offer valuable models for AI governance. These integrated efforts could enable the medical community to proactively mitigate AI risks while harnessing benefits.”

Full article: MB Westover and AM Westover, *JAMA Neurology*, May 5, 2025.